Remote facilitating and presenting can feel especially daunting. Most of the challenges of in-person workshop facilitation and presentation are simply exacerbated in remote scenarios; however, the right tool selection and additional planning can mitigate many of them.

Tips for Remote Facilitating and Presenting

- Turn on your camera: Showing your face can help establish rapport and trust with participants and help them see you as a real person, not just a voice. This sense of connection can be critical when you are seeking buy-in or attempting to guide a group to consensus about UX or design decisions.

- Enable connection: Plan additional time in the agenda for relationship building, such as a digital icebreaker, especially if participants do not know each other. For virtual UX workshops, help participants engage with each other throughout the session by encouraging everyone to address one another by name and by making use of breakout groups, polling, and chat.

- Create ground rules: At the beginning of the session, share ground rules that will help mitigate the inevitable communication challenges of digital meetings. These rules might include asking participants to agree to state their names before speaking, to avoid talking over anyone, and to avoid multitasking.

- Assign homework: Give participants short homework assignments that let them practice using the technology before the session. For example, if you plan on using a virtual whiteboard application during a design workshop, you could ask participants to create an artifact introducing themselves in that same application before the workshop, and then have them share it as an icebreaker at the beginning of the workshop.

- Adapt the structure: Resist the urge to take an existing workshop structure or presentation format and simply reuse it for a remote session. Think carefully about how to transition activities, slides, and content to a virtual format. This includes modifying workshop agendas and presentation timelines to accommodate technology hiccups and additional activities that allow participants to connect and engage.

Tools for Remote Facilitating and Presenting

- Presenting UX work: Zoom, GoToMeeting, and Google Hangouts Meet are a few of the many reliable video-conferencing platforms. When selecting a platform, consider which, if any, specific features you’ll need (e.g., breakout rooms, autorecord, gallery view), and be mindful of any limitations of free versions. (For example, the free version of Zoom caps meetings with more than two attendees at 40 minutes—not something you’d want to realize for the first time in the middle of a presentation about your latest design recommendations!)

- Generative workshop activities: If your goal is to generate a large number of ideas or other contributions, use tools that make it easy to quickly add an item to a list or virtual whiteboard. Google Draw, Microsoft Visio, Sketch, MURAL, and Miro are a few examples that might work for this context.

- Evaluative workshop activities: If your goal is to group or prioritize ideas or contributions, consider platforms with built-in prioritization matrices such as MURAL or Miro. Alternatively, use survey tools such as SurveyMonkey or CrowdSignal, or live polling apps such as Poll Everywhere that you can insert directly into your slides.

Source: NN/g

When it comes to conducting user research, presenting UX work, or collaborating with other team members or stakeholders, we know that face-to-face interaction can have a lot of advantages: It’s easier for participants to build trust and rapport in person than remotely, and attendees are likely to pay attention and be cooperative for longer.

However, we can’t always conduct UX activities face to face. Sometimes, budget or time limitations, travel restrictions, or other unforeseen circumstances make in-person sessions impossible, unaffordable, or even unsafe. In these cases, remote sessions can offer immense value in maintaining the flow of insights and ideas.

Additionally, remote UX sessions offer many benefits that in-person sessions do not, regardless of the circumstances:

- Flexibility in project funds: Remote sessions lower travel expenses. This reduction might free up monetary resources for other valuable activities, such as recruiting additional research participants, doing extra rounds of research, or providing deeper analysis.

- Increased inclusiveness: Location and space are no longer limitations with remote sessions. For user research, this means access to a more diverse group of participants to whom you might not have access locally. For UX workshops and presentations, it means nonlocal colleagues can easily attend and more people in general can participate. (However, it doesn’t mean that everyone should attend. Continue to limit attendance to relevant roles.)

- Attendee convenience: Participants might be more willing and able to attend remote sessions that don’t require them to leave their office or home. They’ll save the commute time (whether that’s a couple of hours driving to a research facility or a few days traveling to a workshop in another city), and they can participate from the comfort of their own space. Especially with remote, unmoderated research or remote, asynchronous ideation, participants can complete tasks on their own schedule.

Given these advantages, remote UX work can be a useful solution to many project challenges. This article provides guidelines and resources for transitioning three common types of UX activities to the digital sphere:

- Conducting user research

- Facilitating and presenting

- Collaborating and brainstorming

Remote User Research

Generally, we recommend in-person usability testing and user interviews whenever possible. It’s simply easier to catch and read participant body language and recognize which breaks in dialogue are appropriate times to probe or ask follow-up questions. However, remote testing is preferable to no testing at all, and remote user research can accelerate insights on tight timelines and budgets.

Tips for Remote User Research

- Practice using the technology: Even if you are familiar with the tool you’ll be using, do a practice run with a colleague or friend. Particularly for remote, unmoderated sessions, make sure instructions for signing in and completing tasks are clear. Plan initial pilot testing with a few users so you can adjust technology and other factors as needed, before launching the study.

- Recruit additional users: With remote, unmoderated research, you can’t step in if a handful of remote sessions are rendered useless by unexpected, unsolvable technology issues on the user’s end, such as firewalls. Recruit more users than you think you need to create a proactive safety net.

- Plan for technology challenges: Technology mishaps will occur, so expect them and don’t panic when they do. Have a backup plan ready, such as a phone dial-in in addition to a web link for user interviews. Whenever possible, use a platform that doesn’t require participants to download anything to join the session.

- Provide instructions: If the technology tool is complex or users will be setting it up and using it over an extended time, create documentation specific to the features you’ll ask them to use. For example, for our digital diary studies using shared Evernote notebooks, we provide detailed documentation for participants on how to set up and use the platform.

- Adjust consent forms: If you’ll be recording the participant’s face, voice, or screen while conducting a remote session, update your consent form to ask for explicit permission for each of these items. If you plan on asking additional researchers to join the session or if you’ll share recordings among the team during postsession analysis, outline and ask for consent on these items, as well.
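To put a rough number on the “Recruit additional users” tip, you can inflate your target by the share of sessions you expect to lose. The sketch below is a hypothetical helper (`recruits_needed` is not part of any research tool) and assumes you can estimate a loss rate, for example from your pilot sessions; the 20% figure in the example is illustrative only.

```python
def recruits_needed(target_sessions: int, expected_loss_pct: int) -> int:
    """Hypothetical helper: how many participants to recruit so that,
    after losing expected_loss_pct percent of sessions to technical
    problems or no-shows, about target_sessions usable sessions remain."""
    if not 0 <= expected_loss_pct < 100:
        raise ValueError("expected_loss_pct must be in [0, 100)")
    # target / (1 - loss), rounded up; integer ceiling division avoids
    # floating-point rounding surprises.
    return -(-target_sessions * 100 // (100 - expected_loss_pct))


# Example: 5 usable sessions wanted, about 20% expected to fail (assumed rate).
print(recruits_needed(5, 20))  # → 7
```

Rounding up rather than to the nearest whole number keeps the estimate on the side of the safety net the tip describes.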

Tools for Remote User Research

Consider tools that match the needs of your study, and, as always, match appropriate research methods to your study goals. Remote research is certainly better than no research at all; however, invalid insights are not helpful in any situation.

- Remote, unmoderated sessions: Tools such as Lookback, dscout, and Userbrain help capture qualitative insights from video recordings and think-aloud narration from users. Tools such as Koncept App and Maze capture quantitative metrics such as time spent and success rate. Many platforms have both qualitative and quantitative capabilities, such as UserZoom and UserTesting. (Be sure to check whether these tools work well with mobile applications, as needed.)

- Remote, moderated sessions: Any video conferencing platform that has screen sharing, call recording, and the ability to schedule meetings in advance is likely to meet the needs of most teams. Zoom, GoToMeeting, and Google Hangouts Meet are frequently used. (Remember to consider platforms that do not require participants to download anything to join the meeting.)

Source: NN/g

A remote working structure can present difficulties for any team, in any field, and it can be especially challenging for those in UX. Since we have recently transitioned (albeit temporarily) to a fully remote working environment, we’d like to take some pointers from NN/g, a company with a successful 100%-remote structure. Here are some specific challenges and recommendations they’ve shared.

Challenges of Remote UX Work


Ideation and Collaboration

UX design often requires collaboration to generate ideas, and in-person ideation workshops are much easier to run than their remote equivalents. When ideation participants are in the same room, they have a shared context: it’s easy to see what others are sketching on a sheet of paper and what sticky notes they’re posting on the walls. Also, when people can see each other face to face, the flow of information and emotion is easier to assess. If you’ve ever been on a conference call and rolled your eyes at a coworker, or awkwardly started talking at the same time as someone else, you’ll know the difference.

In a previous role at a different company, we had chronic problems with ineffective remote meetings: often, one or two people would dominate the conversation while the rest lurked without contributing.

A simple fix can avoid some of those issues: share webcam video as well as audio as much as possible. Just by being able to see the other people in the meeting, you’ll:

- Encourage empathy and bonding

- Be able to see when someone is about to speak

- Assess body language and expressions to better perceive your coworkers’ emotions

You’ll likely get more engagement from participants if they’re on video as well: it’s obvious when someone has mentally checked out of the meeting or is busy sending unrelated emails.

At NN/g we often use multiple tools to achieve a smooth remote ideation workshop. For example, we might share webcam feeds and audio using Zoom or GoToMeeting, while we share ideas visually using a collaborative product like Google Docs, Miro, or Mural.

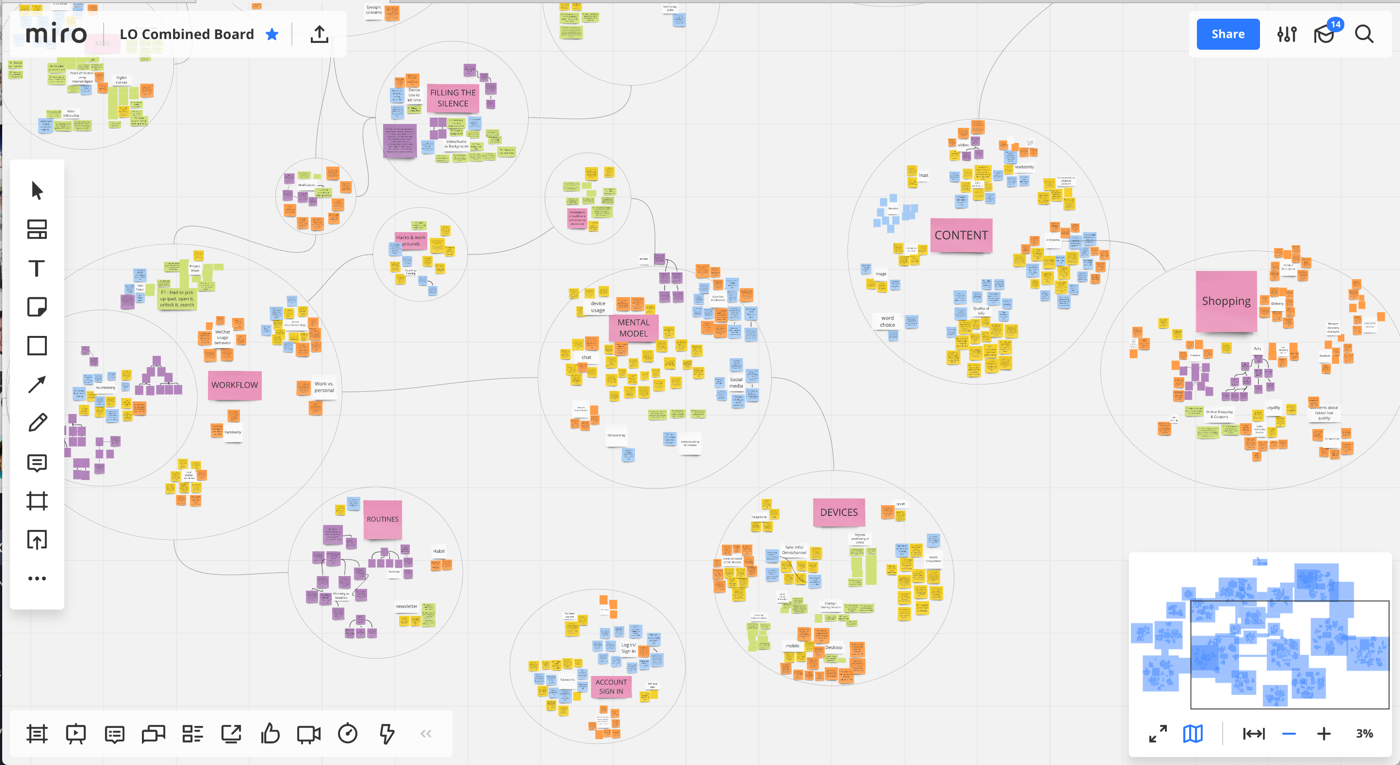

This screenshot shows the results of a remote affinity-diagramming exercise. During a video-conferencing meeting, we used Miro, a whiteboard application, to cluster the findings from our Life Online research project and generate ideas for future articles and projects.

We tailor the tools to fit the needs of a particular meeting. For example, creating a journey map remotely may require different digital products than an ideation workshop.

Other remote teams have found success by using document cameras: each person in the meeting shares her video and audio streams, as well as her document camera that captures what she’s writing or drawing.

Team Building

When you don’t see your coworkers every day, it’s easy to slip into feeling disconnected from them.

We’ve found that having a company Slack workspace alleviates this feeling. In addition to having project- and topic-specific channels, we also have:

- #nng-pets: For sharing photos of our animal office mates

- #the-office-today: For sharing photos of where you happen to be working that day (a coffee shop, out on your deck, by a pool, etc.)

- #feedback: For requesting quick input on anything you’re currently working on (an idea, a graphic, or an excerpt of writing)

Source: NN/g

What is a Content Audit?

The word “audit” can strike fear into the most stoic of hearts. A Content Audit, however, is nothing to be feared. It is simply the process of taking an inventory of the content on your website: a large, detailed list of the information on your site, most often in the form of a spreadsheet. This list will be the basis for the reorganization of your site.

There are three types of audits: Full, Partial, and Sample. A Full audit is just what it sounds like: a complete accounting of every page, file, and media asset. A Partial audit covers a defined subset of your content rather than all of it, ideally touching every section of your site; the subset can be the top few levels of a hierarchical site or the last few months of articles written. A Sample audit is a compilation of example content.

Why Conduct a Content Audit?

We’ve all gone through our closets at some point in our lives to determine what we have, what we need, what we should keep, and what we should throw away. We essentially performed an “audit” of our wardrobe and walked away with a newly reorganized closet, a feeling of accomplishment, and a great excuse to go shopping. Auditing the content of the closet known as your website will produce similar results for you and your users.

It allows you to know exactly what information you have on your site, what information you need, which pages to keep, and which pages are duplicative and can be “thrown away.” In addition, it gives you the information to address potential user pain points and to identify opportunities for added value to your users. Once you have completed your audit, you will have the information you need to create a streamlined, reorganized information architecture for your site, a better understanding of your content, and a great reason to get innovative in the solutions you provide your users.

How to Conduct a Content Audit

There are different ways that you can audit the content of your website and there are different pieces of data that you can include.

The main recommended categories are:

- Navigation Title (main link to the content)

- Page Name

- URL (web address)

- Comments (any notes you’d like to remember)

- Content Hierarchy (how pieces of content relate to one another)

Other suggested categories are:

- Content Type (page, blog post, media, attachment, article, etc.)

- Entry Points (how the user gets to that information)

- Last Date Updated

- Secure/Open (is the page open to all or secure?)

To conduct a successful Content Audit, follow these steps:

- Start with a blank spreadsheet. List your categories in the first row and your main pages or sections in the first column.

- Choose one page and fill in its information according to the categories.

- If there are sub-pages, create a separate row for each under the main page or section and gather the same information for those as well.

- Repeat these steps until every page is listed.

- Analyze the completed spreadsheet to identify opportunities and pain points.
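If your page list already exists in some structured form, the steps above can be sketched in code. This is a minimal sketch under stated assumptions: the `Page` record, the example.com URLs, and the two flagging heuristics in `flag_pages` (repeated page names and pages untouched for a year) are hypothetical illustrations, not a standard audit method. It writes the categories in the first row, places each sub-page on its own row directly under its parent, and encodes the content hierarchy as IDs such as 1, 1.1, 2.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass, field
from datetime import date

# Columns drawn from the recommended and suggested audit categories.
COLUMNS = ["ID", "Navigation Title", "Page Name", "URL",
           "Content Type", "Last Date Updated", "Comments"]


@dataclass
class Page:
    """One page of the (hypothetical) site being audited."""
    nav_title: str
    page_name: str
    url: str
    content_type: str = "page"
    last_updated: str = ""          # ISO date, e.g. "2019-06-30"
    comments: str = ""
    children: list[Page] = field(default_factory=list)


def audit_rows(pages: list[Page], prefix: str = ""):
    """Yield one row per page, depth-first, so each sub-page sits directly
    under its parent; IDs like 1, 1.1, 2 preserve the content hierarchy."""
    for index, page in enumerate(pages, start=1):
        page_id = f"{prefix}{index}"
        yield [page_id, page.nav_title, page.page_name, page.url,
               page.content_type, page.last_updated, page.comments]
        yield from audit_rows(page.children, prefix=f"{page_id}.")


def write_audit(pages: list[Page], out) -> None:
    """Write the inventory as CSV with the categories in the first row."""
    writer = csv.writer(out)
    writer.writerow(COLUMNS)
    writer.writerows(audit_rows(pages))


def flag_pages(pages: list[Page], today: date, max_age_days: int = 365):
    """Example heuristics for the analysis step: repeated page names
    (possible duplicates) and pages not updated within max_age_days."""
    flags, first_seen = [], {}
    for page_id, _, name, _, _, updated, _ in audit_rows(pages):
        if name in first_seen:
            flags.append((page_id, f"possible duplicate of {first_seen[name]}"))
        else:
            first_seen[name] = page_id
        if updated and (today - date.fromisoformat(updated)).days > max_age_days:
            flags.append((page_id, "not updated recently"))
    return flags


site = [
    Page("About", "About Us", "https://example.com/about", last_updated="2018-01-15",
         children=[Page("Team", "Our Team", "https://example.com/about/team")]),
    Page("Blog", "Blog", "https://example.com/blog", content_type="blog index"),
    Page("Staff", "Our Team", "https://example.com/staff"),
]
buffer = io.StringIO()
write_audit(site, buffer)
print(buffer.getvalue())
print(flag_pages(site, today=date(2020, 4, 1)))
```

The resulting CSV opens directly in any spreadsheet application, so the automated pass only replaces the tedious listing work; the judgment about what to keep or remove still happens in the analysis step.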

Key Tip #3 for UX success from Agile Practitioners

Soft skills can hold the keys to success in Agile projects. The surveyed practitioners identified healthy collaboration as a main factor in success. This finding is not surprising; after all, the Agile Manifesto values individuals and interactions over processes and tools. Good communication is essential in any software-development organization, whatever its process methodology. However, collaboration is even more important in Agile settings, where delivery times are short and time-boxed.

Some organizations chose design-thinking techniques such as ideation and brainstorming to encourage discussion and tear down silos that often block effective communication and teamwork.

“Collaboration has been critical.”

“Close collaboration with other roles in the team has [helped us] arrive at agreements sooner in the process.”

“Constant ongoing collaboration with all team members. We use sketching and whiteboard sessions; journey-experience maps help capture the omnichannel experience.”

“Share info together in cross-functional teams. Communicate more with developer and designers.”

“No putdowns and no dismissal of ideas in early stages.”

“Involve everyone on the team and welcome everyone's suggestions and ideas.”

“Keep the relationship between the business analyst, designer, and engineer close.”

“Meet regularly once a week to update and know the progress. Focus on things to help one another accomplish the work.”

“Daily standups, iteration demos, biweekly pulse meetings, and interaction with management.”

In modern software-development environments, UX is heavily involved in defining how online products and services are developed. As such, the role of UX has expanded to include communication. UX can be the catalyst for good collaboration by involving team members in activities such as usability testing and field studies, as well as group design ideation and brainstorming.

Source: NN/g

What are Quality Metrics?

Quality Metrics are the measurements by which you determine whether you are meeting your goal of providing your users with a useful and pleasant experience. These metrics reflect your values and priorities, and ideally they focus on what is essential to your users.

Why Use Quality Metrics?

In your effort to provide an excellent user experience, you will implement changes to your product or service. The only way to determine whether these changes are effective is to measure the results. Quality metrics let you compare the results of your previous process with those of your improved process, after which you can determine whether the changes moved you closer to your goal and what further improvements are necessary. Ultimately, Pearson's Law applies: "That which is measured improves. That which is measured and reported improves exponentially."

How to Create Quality Metrics

Quality Metrics are established during the planning phase. Before you can improve a process, you have to define what “improvement” means. This definition will serve as the basis from which you create your metrics. You can use your Feedback Loop to collect and analyze information against your metrics. It is vital that you establish metrics according to the following guidelines:

- Your metrics should be quantifiable: You are looking for results such as “85% of clients are satisfied” rather than “most clients are satisfied.”

- Your metrics should be actionable: Choose metrics whose results point to something you can change, such as customer satisfaction or ease of use.

- Your metrics should be trackable over time: Construct your metrics so that you can determine whether quality is improving.

- Your metrics should be maintained and updated regularly: Review your metrics consistently and adjust them as your product or service changes.

- Your metrics should be tied to your goals: Make sure your metrics focus on what is essential to your users and will drive the success of your product or service.
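As an illustration of a metric that is quantifiable and trackable over time, the sketch below turns raw survey scores into the share of “satisfied” clients per period, then compares each period with the previous one and with a target. The 1-5 scale, the “score of 4 or higher counts as satisfied” threshold, the 85% target, the `Metric` and `track` names, and the sample scores are all assumptions made for the example, not a prescribed formula.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    target: float  # e.g. 0.85 for "85% of clients are satisfied"


def satisfaction_rate(scores: list[int], threshold: int = 4) -> float:
    """Share of survey responses at or above `threshold` on a 1-5 scale.
    The scale and threshold are assumptions; match them to your survey."""
    if not scores:
        raise ValueError("no responses for this period")
    return sum(1 for s in scores if s >= threshold) / len(scores)


def track(metric: Metric, periods: list[tuple[str, list[int]]]):
    """For each period, compute the rate, the change from the previous
    period, and whether the target was met, so trends stay visible."""
    report, previous = [], None
    for label, scores in periods:
        rate = satisfaction_rate(scores)
        change = None if previous is None else rate - previous
        report.append((label, rate, change, rate >= metric.target))
        previous = rate
    return report


satisfied = Metric("Clients satisfied (score 4 or 5)", target=0.85)
periods = [
    ("Before redesign", [5, 4, 3, 2, 4]),   # illustrative data only
    ("After redesign", [5, 5, 4, 4, 3]),
]
for label, rate, change, met in track(satisfied, periods):
    delta = "" if change is None else f" ({change:+.0%} vs. previous)"
    print(f"{label}: {rate:.0%}{delta}, target met: {met}")
```

Keeping the raw scores per period, rather than only the final percentage, makes it easier to revisit the threshold later if you adjust the metric as your product or service changes.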
